Many clients today have models that run well on smaller core counts, or may be license-bound to a particular core count. Because a single system now offers a large number of CPU cores (56 or more), clients have expressed interest in “stacking” multiple smaller jobs on a single server to increase throughput.

Modern HPC system building blocks based on 3rd Generation Intel Xeon Scalable Processors have high memory bandwidth per core, along with improved AVX-512 and Turbo options, which make stacking less of a performance penalty than in the past.

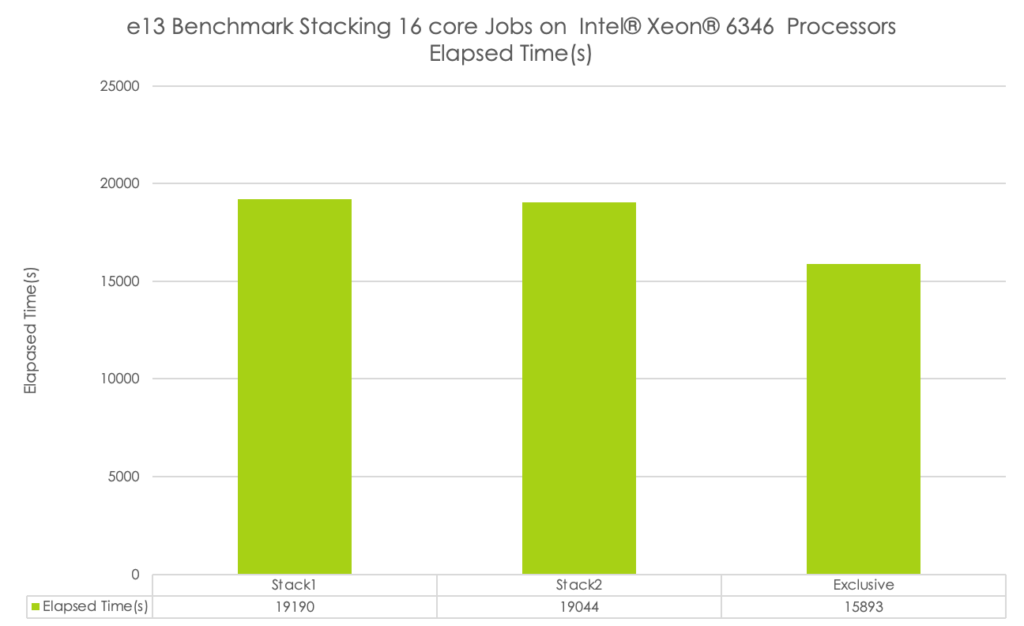

To see the result of stacking on the latest 3rd Generation Intel Xeon Scalable Processors, we ran the Abaqus e13 benchmark on an Intel Xeon Gold 6346 based node that has 32 total cores per server. The baseline was a single 16-core job run in Exclusive mode (using 16 of the 32 cores, which benefits from turbo effects). We then “stacked” two 16-core jobs on the same node, consuming all 32 cores.

From the results, you can see that stacking two identical jobs on one node causes a modest increase in each job's run time, but delivers higher overall throughput. If you need the absolute fastest job time for a fixed token cost, then running exclusive is best, but stacking will give you more throughput overall for small jobs.
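The trade-off between per-job time and node throughput can be sketched with a little arithmetic. The Python below is a minimal illustration, not part of the TotalCAE Platform: the function names are hypothetical, and the timings are placeholder values chosen for the example, not the measured e13 results.

```python
def jobs_per_hour(job_seconds: float, concurrent_jobs: int = 1) -> float:
    """Node throughput when `concurrent_jobs` identical jobs run side by side."""
    if job_seconds <= 0 or concurrent_jobs < 1:
        raise ValueError("job_seconds must be > 0 and concurrent_jobs >= 1")
    return concurrent_jobs * 3600.0 / job_seconds


def stacking_summary(exclusive_seconds: float,
                     stacked_seconds: float,
                     stacked_jobs: int = 2) -> dict:
    """Compare one exclusive job against `stacked_jobs` jobs sharing the node."""
    # How much longer each individual job takes when stacked.
    slowdown = stacked_seconds / exclusive_seconds
    # How many more jobs the node completes per hour when stacked.
    gain = (jobs_per_hour(stacked_seconds, stacked_jobs)
            / jobs_per_hour(exclusive_seconds, 1))
    return {"slowdown": slowdown, "throughput_gain": gain}


# Placeholder timings (assumed for illustration, NOT benchmark data):
# an exclusive job takes 1000 s, each of two stacked jobs takes 1200 s.
summary = stacking_summary(exclusive_seconds=1000.0, stacked_seconds=1200.0)
print(f"per-job slowdown: {summary['slowdown']:.2f}x")
print(f"node throughput gain: {summary['throughput_gain']:.2f}x")
```

Under this simple model, stacking two jobs improves node throughput whenever each stacked job takes less than twice the exclusive job time. Substitute your own measured run times to see where your model falls.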

By default, the TotalCAE Platform disables job stacking and runs jobs in “Exclusive” mode, which means that even if your job uses 1 CPU, it gets the whole machine to itself. This ensures your job runs at peak performance and that other jobs cannot interfere with it, for example by running the machine out of memory. To allow jobs to “stack” on the same node, uncheck the Exclusive box on job submission, or use the -x option in our command-line tsubmit, and experiment with how stacking works for your particular model.

To learn more about TotalCAE features for Abaqus, check out our Abaqus Blueprint Registration at https://www.totalcae.com/abaqus.php